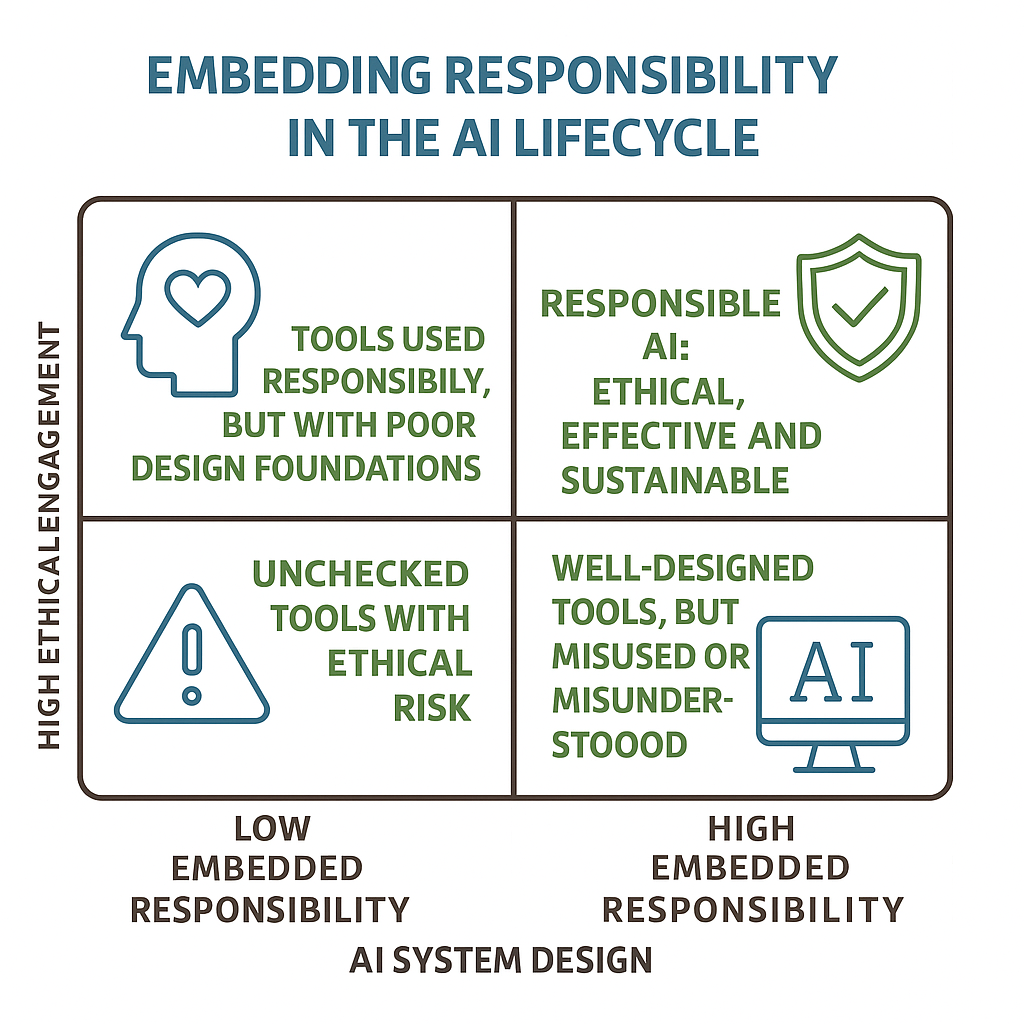

Equally important is the concept of responsible AI: the active process of using AI technologies ethically and equitably. It brings together and contextualises much of what has been covered in this section of the course so far. Beyond the immediate results and conclusions drawn from running an AI model, responsible use should anticipate the broader environmental, social and institutional impacts of a particular AI system. For instance, if a model requires significant computational power, will it remain accessible to others? If it performs well on one population, will it generalise to others? Responsible AI asks users to design not only for effectiveness but also for fairness, sustainability and long-term, wider human benefit.
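
One way to make the generalisation question concrete is to report a metric separately for each population instead of as a single aggregate figure. The sketch below is a minimal, library-free illustration. It assumes binary labels and a group label for every example. The function names `accuracy_by_group` and `max_accuracy_gap` and the data are hypothetical and used only for illustration.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return accuracy computed separately for each population group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Hypothetical labels, predictions and group memberships (illustration only).
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 1, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
print(per_group)                    # {'A': 1.0, 'B': 0.25}
print(max_accuracy_gap(per_group))  # 0.75
```

An aggregate accuracy of 62.5% would hide the fact that the model is perfect on group A but mostly wrong on group B. Breaking results down by group exposes that gap.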

Methods to ensure you develop responsible AI

Responsible AI tools can help researchers operationalise all of these principles. As noted earlier, an AI model should therefore be as capable of assessing its own fairness and explainability as it is of delivering the task at hand. Beyond this, responsible use of AI involves counterfactual analysis, together with tools for tracking data lineage and authenticity, auditing decisions and evaluating outcomes. By embedding these checks directly into the model development pipeline, researchers can monitor, evaluate and report on the ethical dimensions of their AI models from the start.
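
The checks described above can be sketched as a small audit step that runs alongside model development. The example below is a minimal illustration under stated assumptions and does not represent any particular responsible-AI toolkit. It uses demographic parity difference as the fairness measure and a counterfactual test that swaps only the sensitive attribute. It also uses a SHA-256 hash of the data as a simple lineage record and bundles these results into a timestamped audit entry. `toy_model`, the field names and the data are all hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def demographic_parity_difference(y_pred, groups):
    """Gap between the highest and lowest positive-prediction rate across groups."""
    rates = {}
    for g in set(groups):
        preds = [p for p, grp in zip(y_pred, groups) if grp == g]
        rates[g] = sum(preds) / len(preds)
    return max(rates.values()) - min(rates.values())


def counterfactual_flip_rate(model, rows, attribute, swap):
    """Fraction of rows whose prediction changes when only `attribute` is swapped."""
    changed = 0
    for row in rows:
        altered = dict(row)
        altered[attribute] = swap[row[attribute]]
        changed += int(model(row) != model(altered))
    return changed / len(rows)


def dataset_fingerprint(rows):
    """SHA-256 of a canonical JSON serialisation, recorded as simple data lineage."""
    canonical = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


# Hypothetical toy model: approves if income > 30, or if group is "A"
# (a deliberate bias so that the checks have something to detect).
def toy_model(row):
    return int(row["income"] > 30 or row["group"] == "A")


rows = [
    {"income": 20, "group": "A"},
    {"income": 40, "group": "A"},
    {"income": 20, "group": "B"},
    {"income": 40, "group": "B"},
]
groups = [r["group"] for r in rows]
y_pred = [toy_model(r) for r in rows]

# One audit entry per evaluation run; in practice this would be appended to a log.
audit_record = {
    "timestamp": datetime.now(timezone.utc).isoformat(),
    "data_sha256": dataset_fingerprint(rows),
    "demographic_parity_difference": demographic_parity_difference(y_pred, groups),
    "counterfactual_flip_rate": counterfactual_flip_rate(
        toy_model, rows, "group", {"A": "B", "B": "A"}
    ),
}
print(json.dumps(audit_record, indent=2))
```

Here both the parity gap and the counterfactual flip rate come out at 0.5. This flags that the toy model's decisions depend on group membership. Dedicated libraries such as Fairlearn provide more complete fairness metrics. Which measure is appropriate depends on the task and should be justified rather than assumed.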

Responsibility and trustworthiness are therefore as much features of an AI model as they are commitments from its user: a commitment to develop AI systems that diligently serve human progress, with processes and outcomes that are well distributed, reliable and accountable.