When it comes to everyday tasks, AI has proven particularly useful in increasing productivity by handling repetitive operations. By extension, AI also has great potential in managing, processing and interpreting scientific data. It may automate tasks that were once the domain of early-career researchers: image analysis, data annotation, literature reviews, and even hypothesis generation and brainstorming. But with the handling of sensitive data – even for these simpler tasks – comes the need for secure data storage, responsible data sharing and awareness of cyberattack vulnerabilities. Even tasks as simple as digitising lab notebooks or developing AI-based protocol assistants call for robust security measures to prevent data breaches, especially when working with unpublished, vulnerable or sensitive data.

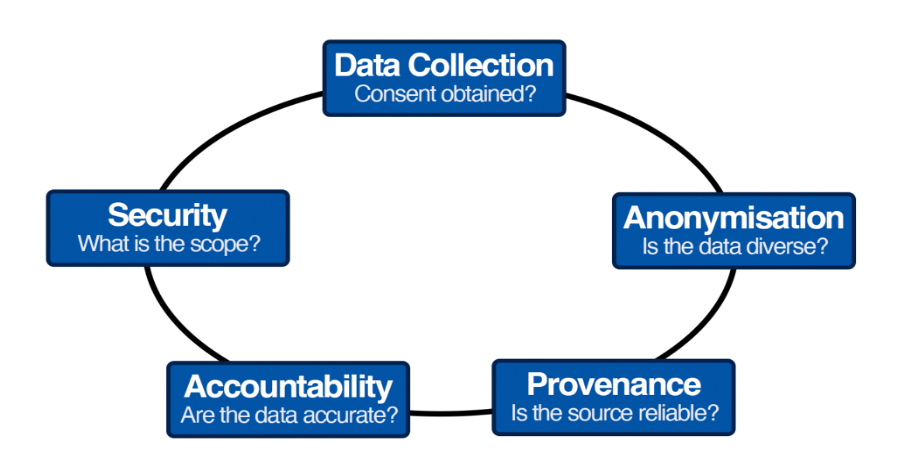

The circle of privacy

This is where developing AI tools, pipelines and models internally becomes attractive and important. With a user-limited scope that extends no further than the walls of the laboratory or institute, security concerns are greatly reduced. Keeping data in-house is not only about compliance with data protection laws (such as GDPR in the UK and EU), but also about maintaining the trust of collaborators, funders, and the public.